Challenges of Multi-Subnet Clients

Ignoring the application-level challenges of multi-subnet clients, the networking setup used on a client faces the same challenges as the server-side setup. Most importantly, it should ensure symmetrical traffic flow: outgoing traffic should always be sent through the interface having the IP address used in the outgoing packet.

Traffic flow symmetry is a desirable property in most deployments, but becomes crucial when:

- Using multipath TCP on devices connected to multiple service providers (some ISPs implement strict checks of source IP addresses to prevent spoofing attacks from their clients);

- Using strict separation of transport networks like SAN-A/SAN-B separation in MPIO iSCSI deployments.
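The first case can be illustrated with a minimal Python sketch of a strict source-address check. The uplink names and prefixes (taken from the documentation address ranges) are assumptions made for illustration only; real ISPs implement such checks in their own equipment.

```python
from ipaddress import ip_address, ip_network

# Hypothetical uplinks: interface name -> prefix assigned by the ISP behind it
UPLINKS = {
    "isp-a": ip_network("192.0.2.0/24"),
    "isp-b": ip_network("198.51.100.0/24"),
}


def passes_source_check(egress: str, source: str) -> bool:
    """Return True if the ISP behind `egress` would accept a packet from `source`."""
    return ip_address(source) in UPLINKS[egress]


def egress_for_source(source: str) -> str:
    """Pick the uplink whose prefix contains the packet's source address."""
    addr = ip_address(source)
    for name, prefix in UPLINKS.items():
        if addr in prefix:
            return name
    raise LookupError(f"no uplink owns source address {source}")


if __name__ == "__main__":
    # A packet sourced from the ISP-A address must leave through the ISP-A uplink
    print(egress_for_source("192.0.2.10"))
    print(passes_source_check("isp-b", "192.0.2.10"))
```

Selecting the outgoing interface based on the source address is exactly what symmetrical traffic flow requires: a packet sent through the other uplink would fail the check and be dropped.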

You could use policy-based routing, VRFs, or routing tricks to implement consistent outbound interface selection. The first two approaches have been described in the previous section; we’ll use a multi-path iSCSI example to describe the typical routing tricks used in iSCSI clients (for example, VMware ESXi).

Example: Multi-Path iSCSI

Imagine the following scenario:

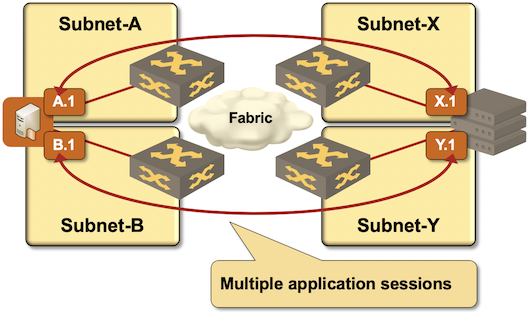

- Servers (iSCSI initiators — clients) and storage arrays (iSCSI targets — servers) are connected to two isolated transport networks (SAN-A, SAN-B);

- SAN-A and SAN-B fabrics are not connected. Servers should use IP addresses in SAN-A to connect to the storage array interface connected to SAN-A (and likewise for SAN-B).

Air-gapped SAN-A/SAN-B iSCSI networks

Assuming you don’t want to deal with policy routing or VRFs, you could enforce the desired traffic flow with a set of static routes:

- A static route for the storage array IP address (or IP prefix) in SAN-A (X.1 or subnet X) points to the server interface connected to SAN-A (A.1);

- A similar static route is configured for SAN-B.

With the default behavior of TCP stacks in most operating systems:

- Traffic for the storage array IP address in SAN-A (or SAN-B) is sent through the server interface connected to the same SAN fabric;

- New TCP sessions established with iSCSI targets in SAN-A therefore use the source IP address assigned to the server interface connected to SAN-A;

- Assuming a similar setup on the storage array, the return traffic uses the same path, resulting in a working iSCSI solution and perfect SAN-A/SAN-B isolation.
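The following Python sketch models that behavior under stated assumptions: a longest-prefix-match lookup over the static routes selects the outgoing interface, and a new session takes the source address of that interface. All interface names, addresses, and prefixes are hypothetical stand-ins for the A.1 and X placeholders used above; a real TCP stack has additional source-address selection rules that are not modeled here.

```python
from ipaddress import ip_address, ip_network

# Hypothetical server interfaces: name -> IP address assigned to the interface
SERVER_IFACES = {
    "san-a": ip_address("10.1.0.11"),
    "san-b": ip_address("10.2.0.11"),
}

# Hypothetical static routes: storage array prefix -> outgoing server interface
STATIC_ROUTES = {
    ip_network("10.10.1.0/24"): "san-a",
    ip_network("10.10.2.0/24"): "san-b",
}


def lookup(dest: str) -> str:
    """Longest-prefix match: return the outgoing interface for `dest`."""
    addr = ip_address(dest)
    matches = [prefix for prefix in STATIC_ROUTES if addr in prefix]
    if not matches:
        raise LookupError(f"no route to {dest}")
    best = max(matches, key=lambda prefix: prefix.prefixlen)
    return STATIC_ROUTES[best]


def new_session(dest: str) -> tuple[str, str]:
    """Return (outgoing interface, source IP) for a new session to `dest`."""
    iface = lookup(dest)
    return iface, str(SERVER_IFACES[iface])


if __name__ == "__main__":
    print(new_session("10.10.1.5"))  # session to a target in SAN-A
    print(new_session("10.10.2.5"))  # session to a target in SAN-B
```

Because the source address always belongs to the interface chosen by the route lookup, a storage array with equivalent static routes sends its replies back through the same fabric.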

Unfortunately, most storage vendors don’t want to burden their users (or their internal support teams) with the host requirements needed to implement well-designed isolated IP fabrics. It’s much easier to shift the burden to the networking team and mandate the use of a single VLAN between iSCSI initiators (servers) and targets (storage arrays), resulting in more complex and more brittle transport fabrics.

More Information

If you’re building a cloud infrastructure, explore the webinars from the Cloud Infrastructure and Software-Defined Data Centers series, in particular the Designing Cloud Infrastructure and Designing Active-Active and Disaster Recovery Data Centers webinars.

If you’re building a data center fabric, start with the Leaf-and-Spine Fabric Architectures and Designs webinar and use the information from the Data Center Fabric Architectures webinar when selecting vendors and equipment.

You get all the above webinars with the ipSpace.net subscription.
