The Problem


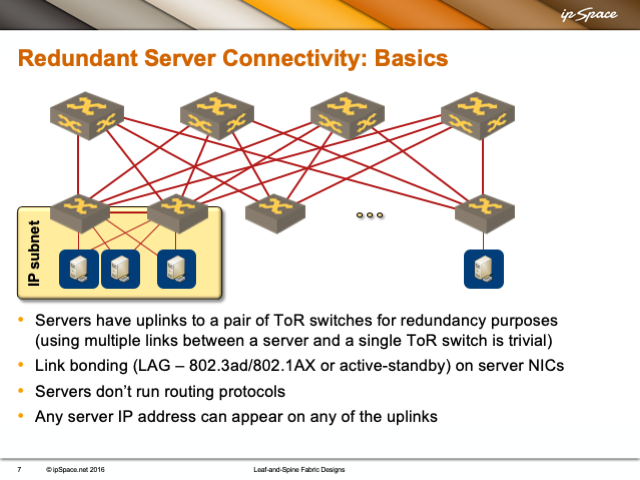

In most small-to-medium-sized leaf-and-spine fabric deployments, you’d want the servers redundantly connected to two leaf switches (also called top-of-rack or ToR switches) to reduce the impact of a leaf switch failure or software upgrade. This design usually requires a single IP subnet, and thus a single VLAN, spanning both leaf switches.

Typical implementation of redundant server connections. Source: Leaf-and-Spine Fabric Architectures

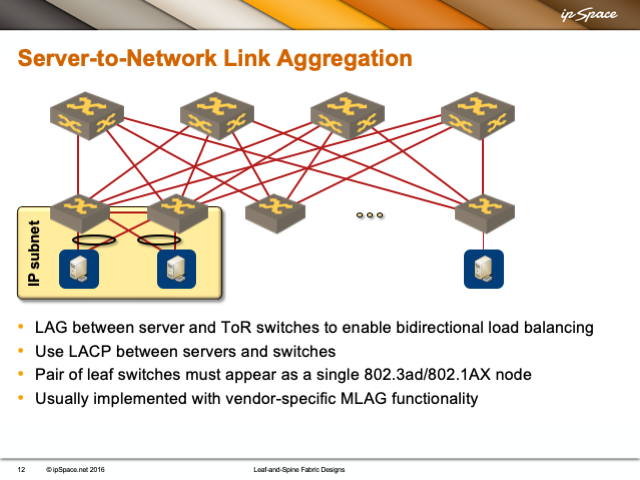

You could configure the server uplinks in active/standby mode, in active/active mode (example: vSphere load-based teaming), or bundle them in a link aggregation group (LAG, aka port channel). No solution is ideal; each one introduces its own set of complexities. For example, the LAG approach requires MLAG functionality on the two ToR switches.

Server-to-fabric link aggregation with MLAG on leaf switches. Source: Leaf-and-Spine Fabric Architectures

Potential Solutions

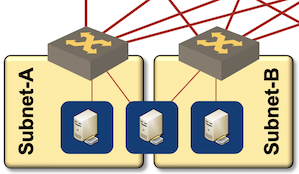

In an ideal world, a data center fabric would be a simple layer-3 leaf-and-spine fabric with IP subnets bound to ToR switches, no VLANs spanning multiple switches, and no links between ToR switches… assuming the servers could use IP addresses from multiple subnets.

Server residing in two subnets
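The following minimal sketch models that ideal design to show where the complexity moves. It assumes a hypothetical addressing plan (two leaves named leaf-1 and leaf-2, each owning one /24, and a server with one address in each subnet) and only tracks which server addresses stay reachable when a leaf fails. A client that knows only one of the server's addresses loses connectivity when the leaf owning that subnet fails, even though the server itself is still up.

```python
import ipaddress

# Hypothetical addressing plan: each ToR switch owns (and advertises) one subnet;
# no VLAN or subnet spans both switches.
TOR_SUBNETS = {
    "leaf-1": ipaddress.ip_network("10.1.1.0/24"),
    "leaf-2": ipaddress.ip_network("10.1.2.0/24"),
}

# The server has one address per uplink, each from a different leaf's subnet.
SERVER_ADDRESSES = [
    ipaddress.ip_address("10.1.1.10"),
    ipaddress.ip_address("10.1.2.10"),
]


def reachable_addresses(failed_leaves):
    """Return the server addresses that remain reachable through the fabric.

    An address is reachable only while the leaf owning its subnet is up,
    because that leaf is the only switch advertising the subnet.
    """
    live = [net for leaf, net in TOR_SUBNETS.items() if leaf not in failed_leaves]
    return [str(addr) for addr in SERVER_ADDRESSES if any(addr in net for net in live)]


if __name__ == "__main__":
    print(reachable_addresses(set()))       # ['10.1.1.10', '10.1.2.10']
    print(reachable_addresses({"leaf-1"}))  # ['10.1.2.10']
```

The network itself stays simple; keeping sessions alive across a leaf failure becomes the job of the servers and clients.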

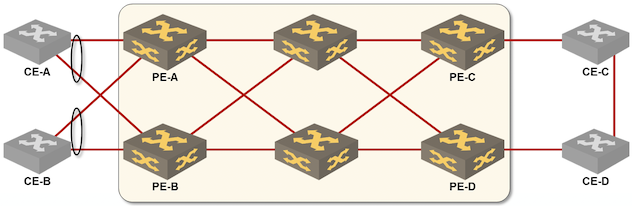

Alternatively, you could use fabric-based solutions to stretch the inter-ToR VLAN across the spine switches instead of using a dedicated inter-ToR-switch link. You can implement this design with EVPN-based solutions or with proprietary ones like Cisco ACI or Extreme Networks’ SPB implementation.

Multihoming with EVPN ESI. Source: EVPN Technical Deep Dive

You could also use one of the routing-on-IP-address solutions to stretch a subnet across multiple leaf switches without stretching a VLAN [1], for example Cumulus Linux’s redistribute ARP [2]. Obviously, this approach only works if the servers use nothing but unicast IP.
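The core idea behind routing on IP addresses can be sketched in a few lines: each leaf turns the ARP entries of its directly attached hosts into /32 host routes, and the rest of the fabric forwards on those routes using longest-prefix match. This is a conceptual model under assumed names (the ARP_TABLES contents, host_routes and lookup are all hypothetical), not how Cumulus Linux implements the feature. It also shows why only unicast IP works: nothing in a table of /32 routes carries broadcasts, multicast, or non-IP traffic between the leaves.

```python
import ipaddress

# Hypothetical ARP tables: both leaves serve the same subnet (10.1.1.0/24),
# but no VLAN connects them, so each leaf only knows its own attached hosts.
ARP_TABLES = {
    "leaf-1": {"10.1.1.10": "52:54:00:00:00:0a"},
    "leaf-2": {"10.1.1.20": "52:54:00:00:00:14"},
}


def host_routes(arp_tables):
    """Turn every ARP entry into a /32 host route pointing at the leaf that learned it."""
    routes = []
    for leaf, entries in arp_tables.items():
        for ip in entries:
            routes.append((ipaddress.ip_network(f"{ip}/32"), leaf))
    return routes


def lookup(routes, destination):
    """Longest-prefix match over the combined routing table; None if nothing matches."""
    dst = ipaddress.ip_address(destination)
    matches = [(net, leaf) for net, leaf in routes if dst in net]
    if not matches:
        return None
    return max(matches, key=lambda match: match[0].prefixlen)[1]


if __name__ == "__main__":
    routes = host_routes(ARP_TABLES)
    print(lookup(routes, "10.1.1.10"))  # leaf-1
    print(lookup(routes, "10.1.1.20"))  # leaf-2
```

Two hosts in the same subnet end up behind different leaves, and traffic still reaches each one, because forwarding decisions rely on per-host routes rather than on a shared layer-2 segment.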

Finally, the servers could use a routing protocol to advertise their loopback addresses to both ToR switches. This approach works really well for servers, but not for clients. The proof is left as an exercise for the reader; if you think you’ve found a good answer, do send me an email.
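A minimal model of why this approach works well for servers: the loopback /32 is advertised over both uplinks, so the fabric keeps a path to it as long as at least one ToR switch is up. The prefix 192.0.2.10/32, the leaf names, and fabric_next_hops are hypothetical, and the sketch ignores the routing protocol itself, reducing each advertisement to a (leaf, prefix) pair that is withdrawn when the leaf fails.

```python
import ipaddress

# Hypothetical server loopback, advertised (for example over BGP) to both ToR switches.
LOOPBACK = ipaddress.ip_network("192.0.2.10/32")

# leaf -> set of prefixes the server advertised to that leaf
ADVERTISEMENTS = {"leaf-1": {LOOPBACK}, "leaf-2": {LOOPBACK}}


def fabric_next_hops(advertisements, failed_leaves):
    """Return, for each prefix, the set of leaves through which the fabric can reach it.

    A failed leaf no longer advertises anything it learned from the server.
    """
    rib = {}
    for leaf, prefixes in advertisements.items():
        if leaf in failed_leaves:
            continue
        for prefix in prefixes:
            rib.setdefault(prefix, set()).add(leaf)
    return rib


if __name__ == "__main__":
    print(sorted(fabric_next_hops(ADVERTISEMENTS, set())[LOOPBACK]))       # ['leaf-1', 'leaf-2']
    print(sorted(fabric_next_hops(ADVERTISEMENTS, {"leaf-1"})[LOOPBACK]))  # ['leaf-2']
```

Unlike the per-subnet addresses, the loopback address does not belong to either leaf's subnet, so a single leaf failure removes one path but not reachability.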

In the rest of this document, we’ll focus primarily on the challenges of using server or client IP addresses from multiple subnets.

[1] Rearchitecting layer-3-only networks, https://blog.ipspace.net/2015/04/rearchitecting-l3-only-networks.html

[2] Layer-3-Only Data Center Networks with Cumulus Linux on Software Gone Wild, https://blog.ipspace.net/2015/08/layer-3-only-data-center-networks-with.html

More Information

If you’re building a cloud infrastructure, explore the webinars in the Cloud Infrastructure and Software-Defined Data Centers series, in particular the Designing Cloud Infrastructure and Designing Active-Active and Disaster Recovery Data Centers webinars.

If you’re building a data center fabric, start with the Leaf-and-Spine Fabric Architectures and Designs webinar, and use the information from the Data Center Fabric Architectures webinar when selecting vendors and equipment.

You get all the above webinars with the ipSpace.net subscription.