
BGP in EVPN-Based Data Center Fabrics


In this section, we’ll focus on running EVPN with VXLAN or MPLS encapsulation within a single data center fabric. We won't consider the implications of running EVPN between data center fabrics, where a robust implementation would need at least some minimal broadcast domain isolation at the fabric edge.

Similar to MPLS/VPN, EVPN uses an additional BGP address family that can be used with IBGP or EBGP sessions. However, EVPN technology assumes the traditional use of BGP as an endpoint reachability distribution protocol working in combination with an underlying routing protocol:

- BGP next hops advertised in EVPN updates point to the egress Label Switch Router (LSR) or VXLAN Tunnel Endpoint (VTEP);

- The underlying routing protocol computes the best path(s) to BGP next hops;

- A BGP router that changes the BGP next hop in an EVPN update must also perform data plane decapsulation and re-encapsulation.
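The split of responsibilities described in the list above can be illustrated with a small Python model. It is a minimal sketch, not how any particular switch implements it: the EvpnRoute type, the UNDERLAY table, the addresses and the resolve function are all hypothetical. The BGP next hop carried in the EVPN route becomes the outer destination of the tunnel, and the underlay table supplies the (possibly equal-cost) paths toward it.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class EvpnRoute:
    """Simplified EVPN MAC route: only the fields needed for forwarding (hypothetical)."""
    mac: str
    vni: int
    bgp_next_hop: str  # loopback address of the egress VTEP

# Hypothetical underlay routing table computed by the underlay routing protocol:
# egress VTEP loopback -> equal-cost underlay next hops (uplinks to spines).
UNDERLAY = {
    "10.0.0.3": ["spine1", "spine2"],
    "10.0.0.4": ["spine1", "spine2"],
}

def resolve(route: EvpnRoute, underlay: dict) -> Optional[tuple]:
    """Return (tunnel destination, underlay next hops), or None if unusable.

    The BGP next hop is used as the outer destination of the encapsulated
    packet; the underlay routing protocol provides the paths to reach it.
    """
    paths = underlay.get(route.bgp_next_hop)
    if not paths:
        # No underlay route to the BGP next hop: the EVPN route cannot be used.
        return None
    return route.bgp_next_hop, paths
```

For example, resolving `EvpnRoute("00:50:56:aa:bb:cc", 10010, "10.0.0.3")` against UNDERLAY yields the tunnel destination 10.0.0.3 reachable over both spines, while a route whose next hop is missing from the underlay table is unusable.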

Overview of Design Options

You can implement an EVPN-based data center fabric using a combination of IGP, IBGP and/or EBGP. Use the following decision tree to decide whether individual options described later in this section might be a good fit for your needs:

- If you decided to use an IGP routing protocol in your data center fabric (see the introductory part of this document for more details), use IBGP on top of an IGP underlay;

- If you decided to use BGP as the underlay routing protocol, and your chosen vendor provides a robust and easy-to-use implementation of EVPN over EBGP, use EBGP-only EVPN design;

- If you cannot use an IGP routing protocol in your data center fabric, and your chosen vendor discourages the use of EVPN route servers on spine switches, you might have to use a combination of IBGP-based EVPN on top of EBGP-based underlay routing;

- If your fabric requires an extremely large number of prefixes that cannot be handled by data center switches acting as route reflectors or route servers, you’ll have to use VM-based route reflectors or route servers. IBGP-based EVPN on top of EBGP-based underlay might be your only option.
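The decision tree above can be condensed into a few lines of Python. This is a simplified sketch: the function and parameter names are hypothetical, and it assumes the prefix-scale rule only forces the IBGP-over-EBGP design when the underlay already runs EBGP (with an IGP underlay, dedicated off-box route reflectors fit the IBGP-with-IGP design anyway).

```python
from enum import Enum

class Design(Enum):
    IBGP_OVER_IGP = "IBGP-based EVPN on top of an IGP underlay"
    EBGP_ONLY = "EBGP-only EVPN"
    IBGP_OVER_EBGP = "IBGP-based EVPN on top of an EBGP underlay"

def choose_design(
    uses_igp_underlay: bool,
    vendor_ebgp_evpn_is_robust: bool,
    needs_offbox_route_reflectors: bool,
) -> Design:
    """Hypothetical encoding of the design decision tree."""
    if uses_igp_underlay:
        # IGP underlay: run EVPN over IBGP on top of it.
        return Design.IBGP_OVER_IGP
    if needs_offbox_route_reflectors:
        # Too many prefixes for switches acting as route servers:
        # VM-based route reflectors imply IBGP on top of the EBGP underlay.
        return Design.IBGP_OVER_EBGP
    if vendor_ebgp_evpn_is_robust:
        return Design.EBGP_ONLY
    # Vendor discourages EVPN route servers on spines.
    return Design.IBGP_OVER_EBGP
```

The order of the checks mirrors the order of the bullets; the only judgment call is letting the prefix-scale requirement override the vendor's EBGP EVPN support.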

EVPN Using IBGP with IGP

The easiest way to build an EVPN-based data center fabric is to:

- Use an IGP (OSPF or IS-IS) as the underlay fabric routing protocol;

- Use IBGP between leaf switches to exchange EVPN updates.

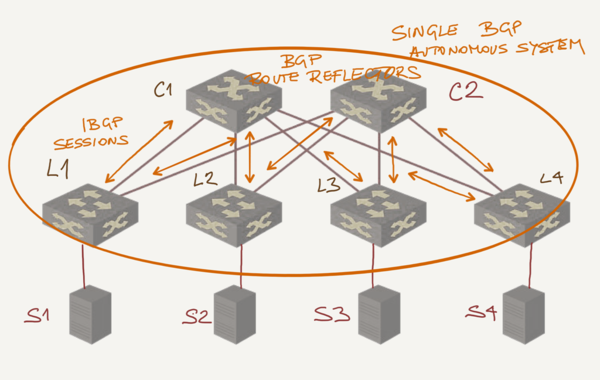

In fabrics larger than a few switches, you should deploy BGP route reflectors. Spine switches are usually an acceptable choice, assuming their CPU and memory can handle the load. Larger fabrics could use dedicated BGP route reflectors running on bare-metal servers or as virtual machines.
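The reason route reflectors pay off beyond a few switches is simple arithmetic: a full IBGP mesh needs n(n-1)/2 sessions, while peering every leaf with r route reflectors needs only n·r. The sketch below is illustrative; the function names are hypothetical and it ignores sessions between the route reflectors themselves.

```python
def full_mesh_sessions(leaves: int) -> int:
    """IBGP sessions needed when every leaf peers with every other leaf."""
    return leaves * (leaves - 1) // 2

def route_reflector_sessions(leaves: int, reflectors: int) -> int:
    """IBGP sessions when each leaf peers only with each route reflector.

    Sessions between the route reflectors themselves are not counted.
    """
    return leaves * reflectors

for n in (4, 16, 64):
    # Prints: leaves, full-mesh sessions, sessions with two route reflectors
    print(n, full_mesh_sessions(n), route_reflector_sessions(n, 2))
# 4 6 8
# 16 120 32
# 64 2016 128
```

With four leaves, the full mesh is actually smaller; the quadratic growth only starts to hurt once the fabric grows beyond a handful of switches.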

EVPN Using EBGP without an Additional IGP

If you decided to use EBGP as your underlay fabric routing protocol due to a large number of switches in your fabric or some other design criteria, you could run EVPN as an additional address family over the existing EBGP sessions.

However, because the spine switches should not be involved in forwarding intra-fabric customer traffic at the overlay level (regardless of whether your implementation uses MPLS or VXLAN encapsulation), the BGP next hop in an EVPN update must not be changed on the path between the egress and ingress switches: the BGP next hop should always point to the egress fabric edge switch.

Conclusion: if you want to exchange EVPN updates across EBGP sessions within a data center fabric, the EVPN implementation must support retaining the original value of BGP next hop on outgoing EBGP updates (similar to Inter-AS Option C in MPLS/VPN world).
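A toy model of EBGP update propagation from an egress leaf through a spine to an ingress leaf shows why this matters. It is a simplified sketch under stated assumptions: the Router and Update types, the next_hop_unchanged flag and the addresses are hypothetical, and real EBGP implementations set the next hop to the address of the outgoing session (not necessarily a loopback) and have additional rules such as third-party next hops.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Update:
    route: str                # EVPN route key, e.g. "mac ... vni ..."
    next_hop: str
    as_path: tuple = ()

@dataclass(frozen=True)
class Router:
    name: str
    asn: int
    loopback: str
    next_hop_unchanged: bool = False  # hypothetical knob modelling the vendor feature

def send_ebgp(sender: Router, update: Update) -> Update:
    """Model an EBGP advertisement from `sender` to a neighbor in another AS."""
    as_path = (sender.asn,) + update.as_path  # EBGP prepends the local AS
    # Default EBGP behavior (simplified): rewrite the next hop to the sender.
    # Keeping it unchanged lets the spine stay out of the overlay data plane.
    next_hop = update.next_hop if sender.next_hop_unchanged else sender.loopback
    return replace(update, next_hop=next_hop, as_path=as_path)

leaf1 = Router("leaf1", 65001, "10.0.0.1")
spine_default = Router("spine1", 65000, "10.0.0.101")
spine_nhu = Router("spine1", 65000, "10.0.0.101", next_hop_unchanged=True)

originated = Update("mac 00:50:56:aa:bb:cc vni 10010", leaf1.loopback)
from_leaf1 = send_ebgp(leaf1, originated)
print(send_ebgp(spine_default, from_leaf1).next_hop)  # 10.0.0.101 (spine)
print(send_ebgp(spine_nhu, from_leaf1).next_hop)      # 10.0.0.1 (egress leaf)
```

With the default behavior, the ingress leaf would send encapsulated traffic to the spine, which would then have to decapsulate and re-encapsulate it; retaining the original next hop keeps the tunnel between the two leaves.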

Not all vendors have seamlessly integrated EVPN with EBGP, so you might encounter interesting vendor limitations like:

- EVPN is supported only on IBGP;

- EVPN is supported on EBGP but the vendor does not support next-hop-unchanged on EBGP sessions;

- Configuring EVPN over EBGP involves many more configuration tweaks than configuring EVPN with IBGP.

In these cases, you might be better off using EVPN over IBGP sessions with an IGP as the underlay fabric routing protocol.

IBGP-Based EVPN on Top of EBGP-Based Fabric Routing

Several vendors advocate designs using IBGP between leaf switches on top of intra-fabric EBGP. Some of these designs use the same AS number on all leaves (requiring allowas-in configuration on leaf switches); others use local-as to pretend the leaf switches are in the same autonomous system as the route reflectors.
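Using the same AS number on all leaves collides with the EBGP loop-prevention check, which is why allowas-in is needed. The sketch below is a simplified model: the function name and the leaf and spine AS numbers are hypothetical, and the allowas_in parameter assumes the common semantics of the knob (the maximum number of occurrences of the local AS tolerated in the AS path); exact behavior varies between vendors.

```python
def accept_ebgp_update(local_asn: int, as_path: tuple, allowas_in: int = 0) -> bool:
    """Simplified EBGP loop prevention, optionally relaxed by allowas-in.

    A router normally drops an update whose AS path contains its own AS;
    allowas-in N tolerates up to N occurrences of the local AS.
    """
    return as_path.count(local_asn) <= allowas_in

# All leaves in AS 65100, spines in AS 65000 (hypothetical numbering).
# A route from leaf1 reaches leaf2 via a spine with AS path (65000, 65100).
print(accept_ebgp_update(65100, (65000, 65100)))                # False: dropped
print(accept_ebgp_update(65100, (65000, 65100), allowas_in=1))  # True: accepted
```

The same check explains the alternative design: with local-as, the leaves never see their own AS number in updates coming from the route reflectors, so no loop-prevention tweak is needed on the leaves.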

These designs are more complex and harder to troubleshoot than simpler IBGP+IGP or EBGP-only designs and should be avoided unless you have a very large fabric (making IGP impractical) or expect a very large number of EVPN prefixes that would exceed the control-plane (CPU/main memory) capability of the spine switches.

More information

- The Leaf-and-Spine Fabric Architectures webinar gives you plenty of data center BGP design guidelines, including an introduction to EVPN. The EVPN Technical Deep Dive webinar covers EVPN in more detail.

- If you need a deeper understanding of data center fabric designs, check out the Designing and Building Data Center Fabrics online course.

- A good data center networking engineer or architect understands the networking technologies and design guidelines. A great networking engineer or architect understands the needs and limitations of the whole stack of technologies and architectures, including compute, storage, and network services. You'll get that in the Building Next-Generation Data Center online course.
