Building Next-Generation Data Center

Build an Optimized Fabric


Let’s review how far we got. We:

- virtualized all servers;

- migrated to 10GE (or 25GE);

- reduced the number of server uplinks (and switch ports);

- distributed the storage across hypervisor hosts, and

- virtualized network services.

The final result: every compute instance (including network services) is virtualized, and you can run all of them on far fewer hypervisor hosts than you started with.

Building Network Services Cluster

Unless your environment is very small and you cannot justify the extra investment, run the virtualized network services appliances on separate physical servers for security and performance reasons.

If your virtual appliances connect directly to a public network, consider using dedicated Ethernet interfaces for that connection. The result is a complete separation between the internal data center fabric and the external network, as shown in the following diagram:

Building Physical Switching Infrastructure

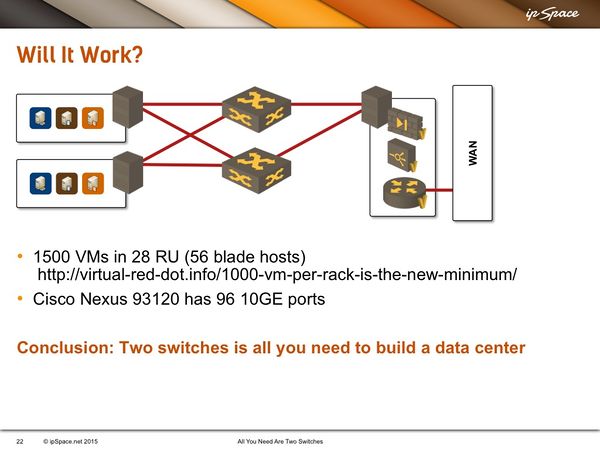

How many switches do you need in your optimized data center? A rough guesstimate would indicate that you don’t need more than two switches for ~2,000 VMs. Iwan Rahabok did a more thorough analysis and calculated that you can run ~1,000 VMs in a single rack and connect all ESXi hosts needed to do that (he used a very conservative 30:1 virtualization ratio) to two ToR switches.
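The arithmetic behind that estimate is easy to reproduce. The following is a minimal sketch, not Iwan Rahabok's actual calculation: the VM count and the 30:1 ratio come from the text above, while the two uplinks per host (one to each ToR switch) and the function names are assumptions made for illustration.

```python
import math


def hosts_needed(vms: int, vms_per_host: int) -> int:
    """Number of hypervisor hosts required for a given consolidation ratio."""
    return math.ceil(vms / vms_per_host)


def ports_per_switch(hosts: int, uplinks_per_host: int = 2, switches: int = 2) -> int:
    """Server-facing ports per switch, assuming uplinks are spread evenly.

    Two uplinks per host and two switches are assumptions, not figures
    taken from the original analysis.
    """
    return math.ceil(hosts * uplinks_per_host / switches)


hosts = hosts_needed(vms=1000, vms_per_host=30)
ports = ports_per_switch(hosts)
print(f"{hosts} hosts, {ports} server-facing ports on each ToR switch")
```

Under these assumptions, roughly 1,000 VMs need about three dozen hosts and about as many ports on each of the two switches. Change the ratio or the number of uplinks to match your own environment.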

Every single data center switching vendor is shipping 64-port 10GE or 25GE switches. Sometimes the datasheets claim the switches have 48 10GE ports and 4-6 40GE ports, but you can usually split each 40GE port into four 10GE lanes (or each 100GE port into four 10GE or 25GE lanes).
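To see how a "48 + 4" or "48 + 6" datasheet turns into a 64-port (or larger) switch, you can count the lanes. This is only a sketch of the port arithmetic: the function name is made up for illustration, and it assumes every high-speed port can be broken out into four lanes, which you should verify for your particular platform.

```python
def usable_10ge_ports(native_10ge: int, breakout_ports: int, lanes_per_port: int = 4) -> int:
    """Total 10GE ports when every 40GE port is split into lanes_per_port lanes.

    Assumes all high-speed ports support breakout; some platforms
    restrict which ports can be split.
    """
    return native_10ge + breakout_ports * lanes_per_port


for uplink_count in (4, 6):
    total = usable_10ge_ports(native_10ge=48, breakout_ports=uplink_count)
    print(f"48 x 10GE + {uplink_count} x 40GE -> {total} x 10GE")
```

Keep in mind that every port you break out for servers is a port you can no longer use for the inter-switch link or uplinks.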

There are also numerous vendors (including Cisco) that have 1RU or 2RU switches with more than 64 ports per switch – ranging from 96 10GE ports (Cisco 93120) to 128 10GE ports available on some Dell, Arista or Juniper switches.

To summarize: If your data center has fewer than approximately 2,000 servers (physical servers or VMs), and you can virtualize them, you probably don’t need more than two data center switches. Most enterprise data centers are definitely in this category.

Discussion Questions

How do you implement layer-2 multipathing in such a simple data center unless you’re using TRILL (or FabricPath) or VXLAN?

In a simple two-switch network you use one of the switches as the primary switch for all the VM traffic, and the other switch as the primary switch for the storage traffic, so there’s no need for load balancing or multipathing.

If you have more than two switches, then obviously you want to have some multipathing because you don’t want to be limited by spanning tree. In those cases I’d strongly recommend using VXLAN on ToR switches or (if your switches don’t support VXLAN) MLAG between the edge and the core switches.

If you’re splitting iSCSI and VM traffic between the two switches, what method would be best for failover in case of a single switch failure?

Obviously, you have to have the same VLANs on both switches, which means that your two-switch network would be a pure layer-2 network with bridging running across the inter-switch link.

Next: configure simple failover on your vSphere or KVM hosts. For every type of traffic make one uplink primary and the other uplink a backup.
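The active/backup idea can be modeled in a few lines. The sketch below is not vSphere or KVM configuration; it only illustrates the policy described above. The switch names, traffic class names, and the `forwarding_switch` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import AbstractSet, Optional


@dataclass(frozen=True)
class UplinkPolicy:
    """Per-traffic-class uplink order: one primary, one backup."""
    active: str
    standby: str


# Hypothetical names: each host has one uplink to switch-A and one to switch-B.
# VM traffic prefers switch-A, storage traffic prefers switch-B.
POLICIES = {
    "vm": UplinkPolicy(active="switch-A", standby="switch-B"),
    "storage": UplinkPolicy(active="switch-B", standby="switch-A"),
}


def forwarding_switch(traffic: str, failed: AbstractSet[str] = frozenset()) -> Optional[str]:
    """Return the switch carrying a traffic class, or None if both are down."""
    policy = POLICIES[traffic]
    for candidate in (policy.active, policy.standby):
        if candidate not in failed:
            return candidate
    return None


# Normal operation: the two traffic classes use different switches.
print(forwarding_switch("vm"), forwarding_switch("storage"))

# switch-A fails: VM traffic moves to its backup uplink and shares switch-B.
print(forwarding_switch("vm", {"switch-A"}), forwarding_switch("storage", {"switch-A"}))
```

After a switch failure both traffic classes share the surviving switch, which is why the same VLANs have to exist on both switches.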