Building Next-Generation Data Center

Ditch Legacy Technologies


If you want to further optimize your data center network, you should ditch the legacy networking technologies, in particular Gigabit Ethernet, as the primary server-to-network connectivity.

A Brief Look Into the Past

While there were early adopters considering 10 Gigabit Ethernet (10GE) in the early 2010s, we were still mostly recommending Gigabit Ethernet (GE) server connections, primarily because GE was a well-known, field-tested technology already present on the majority of server motherboards. It also supported copper cabling, which was not yet available for 10GE.

The primary drawback of GE connections was the large number of interfaces you needed on a single server. VMware design guidelines recommended between four and ten GE interface cards for an ESX host (two for user data, two for storage, two for vMotion…). It was also impossible to consolidate storage and networking, and there was no way to implement lossless transfer for storage on GE links without impacting the regular network traffic.

Data centers using 10GE connections in those days already enjoyed faster vMotion and a converged storage and networking infrastructure. 10GE NICs also allowed you to reduce the number of physical interfaces. However, as ESXi had no built-in QoS in those days, you mostly had to use hardware tricks like Cisco’s Palo Network Interface Card (NIC), which split the physical NIC into multiple virtual NICs that you could then use to implement the VMware design guidelines.
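To put rough numbers on the interface-count argument, here is a minimal Python sketch. It assumes a redundant pair of NICs per traffic class (the "two for user data, two for storage, two for vMotion" pattern mentioned above) and compares that with a single pair of 10GE NICs. The function names and the list of traffic classes are illustrative, not taken from any VMware document.

```python
# Illustrative traffic classes, following the "two for user data,
# two for storage, two for vMotion" pattern (an assumption for this sketch).
TRAFFIC_CLASSES = ["user data", "storage", "vMotion"]
NICS_PER_CLASS = 2  # one redundant pair per traffic class


def ge_interfaces(classes, nics_per_class=NICS_PER_CLASS):
    """Number of GE NICs needed when each class gets its own NIC pair."""
    return len(classes) * nics_per_class


def aggregate_gbps(interfaces, speed_gbps):
    """Raw aggregate bandwidth of a set of identical interfaces."""
    return interfaces * speed_gbps


ge_count = ge_interfaces(TRAFFIC_CLASSES)
print(f"GE design:   {ge_count} NICs, {aggregate_gbps(ge_count, 1)} Gbps aggregate")
print(f"10GE design: 2 NICs, {aggregate_gbps(2, 10)} Gbps aggregate")
```

Even with only three traffic classes, the GE design needs three times as many interfaces (and cables and switch ports) while delivering less raw bandwidth than a single 10GE pair.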

Current Recommendations

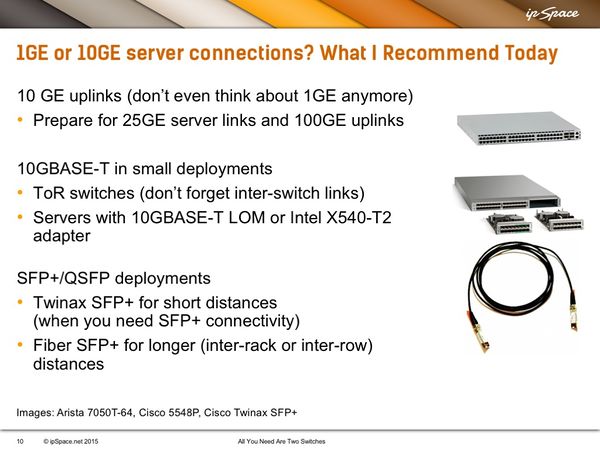

If you’re building a new data center network, please don’t use GE as your primary server connectivity technology; use it only for the out-of-band management network. You should use 10GE interfaces for primary server connections and consider switches that already support 25GE connections, as we can expect 25GE NICs on server motherboards in a few years.

10GE offers numerous connectivity options these days:

- You can use 10GBASE-T if you want to use copper cables within a rack or a small pod. Switches with 10GBASE-T ports are available from most data center switching vendors, and it’s easy to buy servers with built-in 10GBASE-T interfaces.

- If you decide to use SFP+ or QSFP interfaces, use Twinax for short distances and fiber connectivity for longer distances.
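The cabling guidance in the list above can be sketched as a small decision helper. This is only an illustration: the function name is hypothetical, and the 7-meter Twinax threshold is an assumption (a commonly quoted limit for passive cables), so check the vendor data sheets for the cables and transceivers you actually plan to use.

```python
def pick_10ge_cabling(port_type, distance_m, twinax_limit_m=7.0):
    """Suggest a cabling option for a 10GE server link.

    port_type      -- "10GBASE-T", "SFP+" or "QSFP"
    distance_m     -- cable run length in meters
    twinax_limit_m -- assumed maximum Twinax length (hypothetical default)
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if port_type == "10GBASE-T":
        # Copper twisted pair, intended for use within a rack or a small pod
        return "copper"
    if port_type in ("SFP+", "QSFP"):
        # Twinax for short runs, fiber for anything longer
        return "Twinax" if distance_m <= twinax_limit_m else "fiber"
    raise ValueError(f"unknown port type: {port_type}")


for port, distance in [("10GBASE-T", 3), ("SFP+", 2), ("SFP+", 40)]:
    print(port, distance, "m ->", pick_10ge_cabling(port, distance))
```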

Real-Life Case Study

In late 2014 I was invited to review a data center design considered by a financial organization upgrading the network in their data center.

They were replacing old Catalyst 6500 switches with new data center switches from another vendor. The new switches had only 1GE server connections and 10GE uplinks, so I had to ask them “Why are you doing this? It's 2014, why aren't you buying 10gig links?” The reply was not unexpected: “All servers we have in our data center have only GE NICs”.

Next question: “Okay, so you're buying switches with GE interfaces that will become obsolete in a few years. How will you connect next-generation servers with 10GE NICs that will eventually be introduced in your data center?”

Unfortunately, while they completely understood that they were making a suboptimal choice, there was nothing they could do: servers were purchased using a different budget, and thus server upgrades were never synchronized with network upgrades. Furthermore, as most servers required numerous GE connections, buying 10GE switch ports to support them would be way too expensive.
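A back-of-the-envelope calculation shows why connecting GE-only servers to 10GE switch ports is so unattractive. The server count and the number of NICs per server below are made-up values for illustration; the case study does not state the actual numbers.

```python
def switch_ports_needed(servers, nics_per_server):
    """Every server NIC consumes one switch port, regardless of its speed."""
    return servers * nics_per_server


def max_port_utilization(nic_gbps, port_gbps):
    """Best-case share of a switch port's capacity that a slower NIC can use."""
    return nic_gbps / port_gbps


SERVERS = 100               # hypothetical
GE_NICS_PER_SERVER = 6      # hypothetical, e.g. three redundant pairs
TEN_GE_NICS_PER_SERVER = 2  # hypothetical, one redundant pair

ge_ports = switch_ports_needed(SERVERS, GE_NICS_PER_SERVER)
ten_ge_ports = switch_ports_needed(SERVERS, TEN_GE_NICS_PER_SERVER)

print(f"Ports for GE-only servers: {ge_ports}")
print(f"Ports for 10GE servers:    {ten_ge_ports}")
print(f"Best-case use of a 10GE port by a GE NIC: {max_port_utilization(1, 10):.0%}")
```

With these assumed numbers, the GE-only servers would need three times as many 10GE switch ports as servers with 10GE NICs, and each of those ports could never run at more than a tenth of its capacity.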

Conclusion: if you want to consolidate your data center and optimize your costs, you have to do it considering all components within the data center (servers, storage and networking), and when necessary upgrade all components at the same time.

Discussion Questions

We've been looking at moving to 10GE but are turned off by the high cost of genuine SFPs. Vendors also don't seem willing to support Twinax in a heterogeneous environment (for example, when using Cisco switches with IBM servers), so we prefer fiber for 10GE connectivity.

There was a great blog post on Packet Pushers not so long ago explaining how much an SFP costs when you buy it from an Original Design Manufacturer (ODM), and how much the networking vendors charge for the same thing.

You do have to keep in mind that networking vendors have to operate at high margins for financial reasons, which is one of the reasons small items like RAM and SFPs cost more when you buy them from those vendors, but some of the ratios mentioned in that blog post were ridiculous.

Unfortunately, it seems that the major data center networking vendors use the SFP costs as hidden per-port licensing, reducing the cost of the base hardware and earning more on the SFPs you have to buy from the same source.

Maybe things will get better with whitebox switches coming on the market and data center engineers slowly figuring out that you could use that approach in some environments, but it will take a long time until enterprise data centers consider whitebox switches running third-party software a viable approach.