Building Next-Generation Data Center

Reduce the Number of Server-to-Network Uplinks in Your Data Center


An easy way to reduce the number of transceivers, cables, and networking devices in a data center is to minimize the number of uplinks per server. As easy as that sounds, the path to that goal is rarely trivial.

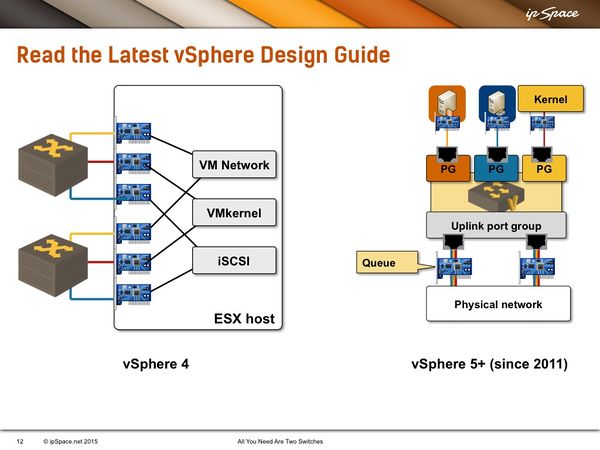

The first obstacle you might encounter while trying to do that is virtualization architects who still use obsolete vSphere design practices. The following diagram compares VMware recommendations for ESXi version 4 and newer recommendations for ESXi versions 5 and above.

vSphere version 4 had no QoS, and thus VMware recommended using separate interfaces for VM traffic, for kernel traffic like vMotion, and for storage traffic, resulting in at least six network interfaces per server (two per traffic type for minimum redundancy).

In vSphere 5 VMware implemented rudimentary QoS called Network I/O Control (NIOC), and started recommending two 10GE interfaces per server with QoS configured on server uplinks to allocate bandwidth to VM traffic, storage and vMotion.
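To illustrate how share-based QoS divides a congested uplink, here is a minimal sketch in Python. It is not VMware's implementation: the function name and the share values are assumptions made up for this example, and it models only the basic idea that each active traffic class gets capacity in proportion to its shares.

```python
def allocate_bandwidth(link_gbps, shares, active):
    """Split link capacity among the active traffic classes by share ratio.

    Models only the congested case; an uncongested link needs no arbitration.
    """
    total = sum(shares[name] for name in active)
    if total == 0:
        return {}
    return {name: link_gbps * shares[name] / total for name in active}


# Hypothetical share values chosen for illustration (not VMware defaults).
shares = {"vm": 100, "storage": 100, "vmotion": 50}

# All three traffic classes compete for one 10GE uplink.
print(allocate_bandwidth(10, shares, ["vm", "storage", "vmotion"]))

# No vMotion in progress: its share is redistributed to the other classes.
print(allocate_bandwidth(10, shares, ["vm", "storage"]))
```

With these assumed shares, vMotion is limited to 2 Gbps only while the other classes are also busy; when it is idle, VM and storage traffic split the link evenly.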

What’s the impact of the changes in the recommended design as you go from an old server running vSphere 4 to a new, more powerful server running vSphere 5 or 6? You can increase the virtualization ratio (for example, going from 10 VMs per server to 50 VMs per server) while at the same time reducing the number of interfaces.

If you had a 48-port Gigabit Ethernet switch less than a decade ago, you could connect eight servers with 80 VMs to it. Today you can connect 24 servers running over a thousand VMs to a single 48-port 10GE switch: an enormous reduction in the network size and corresponding complexity.
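The arithmetic behind those numbers can be checked with a short Python sketch. The class and function names are made up for this example; the inputs (six uplinks and 10 VMs per server in the old design, two uplinks and 50 VMs per server in the new one) come from the text above.

```python
from dataclasses import dataclass


@dataclass
class ServerDesign:
    uplinks_per_server: int
    vms_per_server: int


def switch_capacity(ports, design):
    """Return (servers, vms) that fit on one switch with the given port count."""
    servers = ports // design.uplinks_per_server
    return servers, servers * design.vms_per_server


old_design = ServerDesign(uplinks_per_server=6, vms_per_server=10)
new_design = ServerDesign(uplinks_per_server=2, vms_per_server=50)

print(switch_capacity(48, old_design))  # (8, 80)
print(switch_capacity(48, new_design))  # (24, 1200)
```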

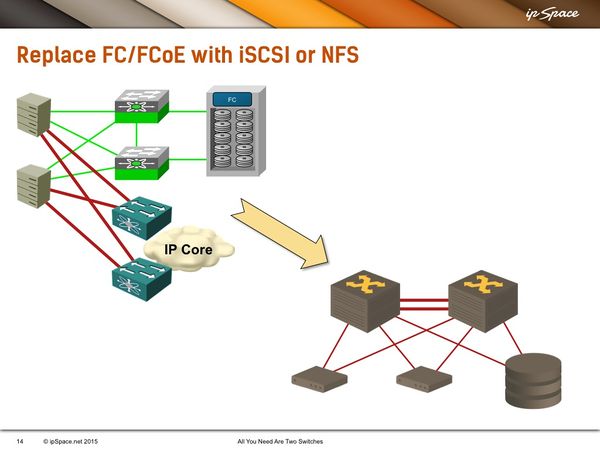

After consolidating the server network interfaces, it’s time to redesign the storage network. Traditional servers had Ethernet interfaces dedicated to VM or management traffic, and separate Fibre Channel storage interfaces. Modern designs consolidate all traffic on a single set of uplinks and use IP-based storage or FCoE.

Using IP-based storage (NFS or iSCSI) instead of Fibre Channel results in the greatest reduction in port count and complexity:

- The number of server uplinks (and corresponding optics and cables) is reduced by half;

- The number of access (leaf) switches is reduced by half;

- You’re removing a whole technology stack.

If you still need Fibre Channel support in your data center (for example, to connect tape backup units), try to consolidate the server uplinks. Replace the separate LAN and SAN interfaces in each server (a minimum of four ports) with two 10GE ports running FCoE.

Consolidating Fibre Channel and Ethernet between servers and access switches reduces the number of management points by a factor of two, as you no longer need separate access switches for Fibre Channel and Ethernet.
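The effect on port and switch counts can be sketched in Python. This is a simplified model under stated assumptions: 24 servers, 48-port access switches, and one server port into each redundant fabric. The function and fabric names are hypothetical.

```python
import math


def access_layer(servers, fabrics, ports_per_switch=48):
    """Return (server_ports, access_switches).

    Assumes each server has one port into every fabric and each fabric
    is built from its own set of access switches.
    """
    switches_per_fabric = math.ceil(servers / ports_per_switch)
    return servers * len(fabrics), switches_per_fabric * len(fabrics)


# Separate LAN and SAN: two Ethernet fabrics plus two Fibre Channel fabrics.
separate = access_layer(24, ["lan-a", "lan-b", "san-a", "san-b"])

# Converged: two 10GE fabrics carrying both LAN traffic and FCoE.
converged = access_layer(24, ["fcoe-a", "fcoe-b"])

print(separate)   # (96, 4)
print(converged)  # (48, 2)
```

In this model both the number of server ports and the number of access switches drop by half, matching the factor of two mentioned above.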

Some storage architects prefer to keep iSCSI networks separate from the data networks, or at least use different access-to-core links and dedicated core switches for the iSCSI part of the network.

However, if you have already performed the other consolidation steps, it’s easy to set up the servers to forward VM data primarily through one access switch, and use the other access switch primarily for iSCSI or NFS (with each switch serving as a fully functional backup for the other switch).

End result: As long as all ports are operational, your VM data and your storage data never mix: they’re on two different VLANs and use separate access switches. But if one of the access switches crashes or a server-to-switch link fails, the traffic fails over to the other link/switch automatically.
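The active/standby behavior described above can be modeled with a minimal Python sketch. It is not a hypervisor configuration; the switch names, traffic class names, and the `pick_uplink` function are assumptions used only to show the selection logic.

```python
# Per-traffic-class uplink order: first entry is preferred, second is backup.
# Names are hypothetical.
UPLINK_ORDER = {
    "vm": ["switch-a", "switch-b"],
    "storage": ["switch-b", "switch-a"],
}


def pick_uplink(traffic_class, operational):
    """Return the first operational uplink for a traffic class, or None."""
    for uplink in UPLINK_ORDER[traffic_class]:
        if uplink in operational:
            return uplink
    return None


# Everything is up: VM and storage traffic use different switches.
all_up = {"switch-a", "switch-b"}
print(pick_uplink("vm", all_up), pick_uplink("storage", all_up))

# switch-a (or the link to it) fails: both classes share switch-b.
degraded = {"switch-b"}
print(pick_uplink("vm", degraded), pick_uplink("storage", degraded))
```

The two traffic classes share a switch only in the failure case, which is the trade-off the design accepts in exchange for not building a dedicated storage network.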

Table of Contents

- Introduction

- Virtualize the servers

- Stop using legacy technologies

- Reduce the Number of Server-to-Network Uplinks

- Use Distributed File System

- Virtualize Network Services

- Build an optimized fabric