Building Next-Generation Data Center

Virtualize the Servers


The first step on your journey toward an optimized data center infrastructure should be radical virtualization. However, it’s amazing how many people haven’t finished the virtualization process yet.

On the other hand, lots of enterprises have virtualized 95% or more of the workload, and the only applications they’re running on bare-metal servers are large databases or applications where the vendor requires the application to be run on physical servers.

Once you’ve virtualized the workload and become flexible, you can start right-sizing the servers. Modern servers support CPUs with a large number of cores per CPU and a large number of CPU sockets per server. Based on that, it’s not uncommon to run over 50 reasonably sized VMs (4 GB of RAM, 1 vCPU) on a typical data center server.

To optimize the servers for your environment, follow this process (assuming you know the typical VM requirements):

- Select a server type that might be a good fit.

- Based on the number of CPU cores supported by the selected server, typical VM size, and desired vCPU oversubscription ratio, figure out how many VMs you could run on such a server.

- Based on the number of VMs and typical VM RAM usage, calculate the amount of RAM you’d need in the server.

- Figure out what the limiting factor is, be it the number of cores or RAM, and repeat the exercise until you get a server model with an optimum price-per-core ratio.
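The sizing steps above can be sketched in a few lines of Python. This is only a rough illustration: the `ServerModel` and `VmProfile` classes, the `size_server` function, and all prices are hypothetical, and the calculation ignores hypervisor overhead and failover capacity.

```python
import math
from dataclasses import dataclass


@dataclass
class ServerModel:
    name: str
    sockets: int
    cores_per_socket: int
    max_ram_gb: int
    base_price: float        # hypothetical price of chassis + CPUs
    price_per_gb_ram: float  # hypothetical RAM price


@dataclass
class VmProfile:
    vcpus: int
    ram_gb: int


def size_server(server: ServerModel, vm: VmProfile, vcpus_per_core: float) -> dict:
    """Estimate VM count, required RAM, and price per core for one server model."""
    cores = server.sockets * server.cores_per_socket
    # VMs the CPU cores can carry at the desired vCPU oversubscription ratio
    vms_by_cpu = math.floor(cores * vcpus_per_core / vm.vcpus)
    # VMs that fit into the maximum RAM the server supports
    vms_by_ram = server.max_ram_gb // vm.ram_gb
    vms = min(vms_by_cpu, vms_by_ram)
    ram_needed_gb = vms * vm.ram_gb
    price = server.base_price + ram_needed_gb * server.price_per_gb_ram
    return {
        "cores": cores,
        "vms": vms,
        "ram_needed_gb": ram_needed_gb,
        "limiting_factor": "cores" if vms_by_cpu <= vms_by_ram else "RAM",
        "price_per_core": price / cores,
    }


# Made-up candidate: 2 sockets x 8 cores, up to 512 GB of RAM
candidate = ServerModel("mid-range-2s", 2, 8, 512, 8000.0, 10.0)
small_vm = VmProfile(vcpus=1, ram_gb=4)
result = size_server(candidate, small_vm, vcpus_per_core=4)
print(result)
```

Running the same function over several candidate server models and comparing `price_per_core` is one way to repeat the exercise for every new batch of servers.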

Usually the mid-range servers give you the best price-per-core ratio, but as server vendors continue to roll out new models, you might have to redo the whole exercise every time you’re buying a new batch of servers.

Is High-Density Virtualization Realistic?

When I explain the concepts of high-density virtualization I usually get two types of responses: (A) yeah, we’re already doing that or (B) nobody in his right mind would be doing it.

As always, it’s better to base your decisions on data than on opinions. Frank Denneman (when working for PernixData, now Nutanix) published a number of blog posts describing anonymized statistics they collected from thousands of customer ESXi (vSphere) servers. This is what they found in their survey:

- Most servers have two sockets (84%) and between 8 and 24 cores (25% having 16 cores). The most common server configuration is thus a 2-socket server with 8 cores per socket.

- Most ESXi hosts have between 192 and 512 GB of memory with almost 50% having between 256 GB and 384 GB of memory.

Assuming a typical small VM would consume 4 GB of memory, it’s possible to run 60 to 70 VMs on an average server described in the PernixData survey, and even with larger VMs and some oversubscription 50+ VMs per server is reasonable.

The other blog post Frank published covered the VM density data, and that one was even more interesting because the density data was all over the board, from fewer than 10 VMs per host to more than 250 VMs per host. However, once I removed the low-end outliers, 70% of the hosts surveyed had more than 20 VMs and 34% of the hosts surveyed had more than 50 VMs. The obvious conclusion: your peers are packing a large number of VMs onto their ESXi hosts.

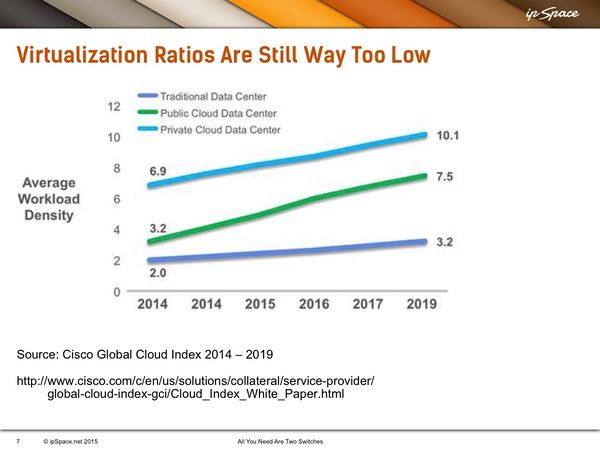

The other source of data I have is Cisco’s Global Cloud Index, which shows much lower VM density. It could be that cloud providers use low-end servers, or it may be that people interviewed in that survey haven’t started virtualizing their workloads at large scale.

Long story short: You can easily fit tens of server VMs on a mid-range physical server. As the first consolidation step, get rid of bare-metal servers and squeeze your workload into as few optimally sized virtualization hosts as possible.

Discussion Questions

Have you seen people running humongous VMs like databases, particularly in-memory databases, or do they prefer to use bare-metal servers for that?

I’ve seen both public cloud providers and enterprise data centers running humongous VMs (30+ cores, 1 TB of RAM), often in a single VM per server setup.

They’re virtualizing huge workloads to simplify the maintenance: running a database server in a VM allows them to decouple the hardware maintenance from the server maintenance.

For example, if they have to upgrade the server, change the hardware, or upgrade the hypervisor kernel, they can move the customer VM to another equivalent server, and do the hardware or hypervisor maintenance.

Alternatively, if you have to upgrade your virtualized server (add more CPU or RAM), the provider can move your VM to a more powerful physical server, and then just change the virtual RAM and virtual CPU settings without impacting the VM uptime. Worst case, you would have to restart the VM, which is orders of magnitude faster than having to open the physical server, and install the additional RAM or CPU.

Most customers running very large VMs run a single VM per physical server, but still run the workload virtualized due to the benefits explained above. However, you have to be careful when running VMs with a very high number of CPU cores. For example, if your database VM requires 32 cores, it won’t be scheduled to run unless at least 32 cores are available. Running a 32-core VM on a 32-core server will result in degraded performance: every time the hypervisor has to run another task, the VM is stopped and most cores sit idle. Make sure you have more cores in your physical server than the number of cores the VM requires.
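The rule of thumb above can be expressed as a simple placement check. This is a minimal sketch: the `check_vm_fit` function and the `reserved_cores` default are hypothetical, and the right number of spare cores depends on your hypervisor and on whatever else runs on the host.

```python
def check_vm_fit(vm_vcpus: int, host_cores: int, reserved_cores: int = 2) -> str:
    """Classify how a large VM fits onto a physical host.

    reserved_cores is an assumed number of cores left free for the
    hypervisor and other tasks; it is not a vendor recommendation.
    """
    if vm_vcpus > host_cores:
        return "does not fit"
    if vm_vcpus > host_cores - reserved_cores:
        return "fits, but no headroom"
    return "ok"


print(check_vm_fit(32, 32))  # 32-core VM on a 32-core server
print(check_vm_fit(32, 40))  # same VM on a server with spare cores
```

The first call reports the problematic case described above, the second one a host with enough spare cores.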

Have there been any good use cases of virtualized Hadoop clusters?

There are two aspects to this question:

- How flexible do you want to be?

- How many resources can a server consume?

If your servers (or jobs running on them) are long-lived, it makes sense to virtualize them. If you’re scheduling independent short-lived jobs with no persistent data on those servers, then it doesn’t matter whether you virtualize them or not – you can always take them offline.

Coming to the other aspect: if your Hadoop servers could consume all the CPU cores (or all the RAM) in your physical server (assuming you did the exercise and found an optimal physical server configuration), then it may not make sense to virtualize the Hadoop cluster apart from the maintenance/upgrade benefits.

However, if the workload running on the server cannot consume all the RAM and all the CPU resources available in that physical server, then yet again it makes sense to use virtualization to squeeze more out of the physical resources that you bought.

There’s no universal answer. The only way to figure out whether you could squeeze more out of the existing hardware is to monitor the resource utilization of your physical servers.
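As a rough illustration of that monitoring exercise, the sketch below takes utilization samples you have already collected (as fractions of total capacity) and reports how much CPU and RAM remains unused at a chosen percentile. The function names, the sample values, and the choice of the 95th percentile are all assumptions; the sample lists are expected to be non-empty.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def headroom(cpu_samples, ram_samples, p=95):
    """Fraction of CPU and RAM left unused at the p-th percentile of load."""
    return {
        "cpu": 1.0 - percentile(cpu_samples, p),
        "ram": 1.0 - percentile(ram_samples, p),
    }


# Made-up utilization samples for one physical server
cpu = [0.20, 0.30, 0.25, 0.40, 0.35]
ram = [0.50, 0.55, 0.60, 0.58, 0.52]
spare = headroom(cpu, ram)
print(spare)
```

A server that consistently shows large headroom on both resources is a candidate for running more workload through virtualization.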